Some info on the "tiering" project I'm releasing sometime next month, and a general post on tiers

Introduction

Defining tiers in any Smash game in an exact manner is a fruitless effort. You can hypothetically do this in any game, but Smash as a competitive game is limited in funding, and the type of money you'd need to mass test entire casts or characters to DEFINITIVELY demonstrate that Lucas is better than Hero is probably outside of what even Nintendo can afford to bankroll.

You can kind of see this in tiering discussions as they've evolved. Tier lists are never official anymore because the interest in Backrooms subsided. Now, most top players give their own, often very different tier lists, and people debate the differences and the ideas that go into them. Social media has given rise to impromptu and widespread discussion of character viability.

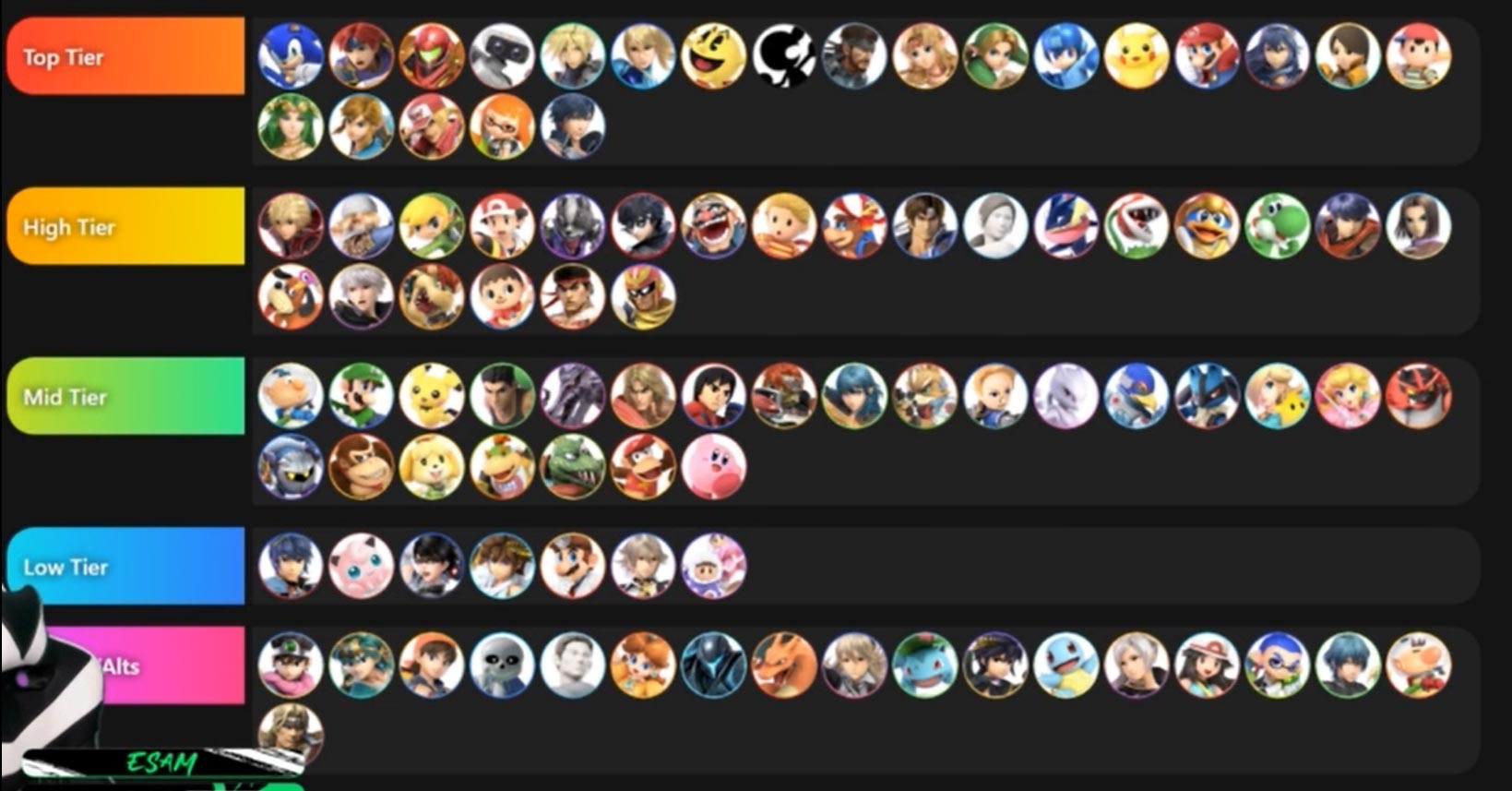

That last part is pretty key. As discussions have evolved, you'll notice that people frequently opt to make portions or the entirety of their tier lists unordered within the designated categories (Top, High, Mid, Low, Bottom, and the wide variety of variants in between), and debates over who is better (or worse) are mostly reserved for the extreme ends of the list.

Ultimate is probably the peak of this because it has an absurdly large, growing cast that will top out at 80+ characters, one of the largest rosters ever for a competitive title. At this point, the strict differences that place characters between Mid and High will probably never be figured out, given that the now-resurgent Melee scene still cannot settle on who the best character is after a 10-year renaissance. That's not a criticism of Melee on my part; it's just that tier lists genuinely vary as to whether #1 is Fox, Marth, or Puff. Nobody knows.

A microcosm of why it's pretty much impossible to determine objective quality for the cast beyond very vague ranges identified in tiering is the fact that even seemingly simple things cannot be effectively quantified. My favorite example is "What is the average kill % for the cast?", an on-the-face-of-it simple question that is almost impossible to answer.

My attempt at this project in Smash 4 ran into a number of "oh no" moments. First, the concept was part of the then-in-production Bayonetta article. I scrapped this idea as Power Ranking data took up more time than I thought, but after the advocacy for a Cloud ban and the Bayo follow-up were released, I really tried to construct what the average kill % was for the top tiers.

The solution would be simple: go into VODs and see when characters died. The problem here is that any numbers I obtained could be severely biased by which VODs were available and the skill levels represented in them. A limited selection of Corrins, for example, means I would have to rely entirely on Cosmos and what few Zackray VODs existed at that point in time, whereas I prefer to work with 3-5 example players per character since that gives a variance in playstyle.

Variances in playstyle are important in ways that can be visualized. Marss may have played hundreds of hours of sets vs. Light collectively, but it did not matter, because Paseriman had far, far more experience against ZSS mains in Japan collectively than Marss had Fox experience. That closed the skill gap a lot, since Marss was relying on his Light experience in this matchup.

Things like that are totally lost in an "average kill percent" project. The closest example of this being achieved was a 1-to-1 comparison between Toon Link & Young Link in a very good research piece by DHTT:

https://www.reddit.com/r/smashbros/comments/eo36qv/young_link_vs_toon_link_a_statistical_analysis/

One issue I didn't touch on that comes into play, beyond a lack of players and potential reliance on single playstyles, is that kill %s will vary depending on the matchup due to varying weights/sizes. This is probably the single biggest factor that makes the resulting numbers useless. It works in DHTT's project because he only uses footage where both characters are playing against the same characters. I.e., if he uses a VOD of Toon Link vs. Mario, he must use a VOD of Young Link vs. Mario.
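To make the matched-opponent idea concrete, here is a minimal Python sketch. It is not DHTT's actual method, only an illustration of the constraint described above: samples are kept only for opponents that both characters have footage against. The function name, the tuple layout, and every number are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def matched_kill_percents(samples, char_a, char_b):
    """Compare two characters using only opponents that both have faced.

    `samples` is an iterable of (character, opponent, kill_percent) tuples,
    one per stock logged from a VOD. This layout is an assumption.
    Returns {opponent: (mean % for char_a, mean % for char_b)}.
    """
    by_pair = defaultdict(list)
    for character, opponent, percent in samples:
        by_pair[(character, opponent)].append(percent)

    opponents_a = {opp for (char, opp) in by_pair if char == char_a}
    opponents_b = {opp for (char, opp) in by_pair if char == char_b}

    # Only opponents present for BOTH characters are comparable.
    return {
        opp: (mean(by_pair[(char_a, opp)]), mean(by_pair[(char_b, opp)]))
        for opp in sorted(opponents_a & opponents_b)
    }


# Made-up numbers purely for illustration, not real VOD data.
samples = [
    ("Toon Link", "Mario", 120),
    ("Toon Link", "Mario", 130),
    ("Young Link", "Mario", 110),
    ("Toon Link", "Fox", 140),  # dropped: no Young Link vs. Fox sample
]
comparison = matched_kill_percents(samples, "Toon Link", "Young Link")
```

The Fox sample is discarded because there is nothing to compare it against, which is exactly why this approach gets harder as the roster grows.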

Discussions with people on the PG Stats discord further confirmed that this effort would be fruitless and the data unreliable, so it was abandoned. It did teach a big lesson on tiers as a whole: people use results and match-up charts as proxies because especially detailed comparisons are increasingly impossible with each new title, as the roster seems to grow by 50% each game.

So, what can be done?

My idea is to acknowledge that we use proxies, and to expand those proxies to include a variety of subjective and objective qualities. Subjective or hypothetical qualities would be based on professional/community opinion: collected match-up charts, tier list aggregates, or existing players who lack results at large-scale tournaments but whose preliminary local/online data suggests they would be top 50-level. From there, you can start comparing proxies.

This doesn't give you a definitive ordered tier list, but it could potentially create more clear shades of who is and isn't viable, and who does & doesn't have immediate potential for tier list growth.

The usefulness of these metrics isn't even that they'll be combined into some finished tier list. That'll be a nice capstone, but the biggest thing is that you can break down each metric individually and come up with areas where characters have perceived strengths & weaknesses.

Objective qualities, or ones based on results, can be broken down into a large number of categories. The scoring on my TTS is one method that combines performances from regional/national data, but I've also been doing things like taking the average peaks of the top X performances of each character in the game. With the appropriate penalties applied for poor qualities (high secondary use, co-maining, especially early results), this can shed light on a number of factors.
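As a rough illustration of the "average peaks" idea, here is a minimal Python sketch. The scoring scale, the tag names, and the use of multiplicative penalties are all assumptions made for the example; the post does not specify how penalties are actually applied.

```python
from statistics import mean


def average_peak(results, top_n=10, penalties=None):
    """Mean of a character's `top_n` best scores after penalties.

    `results` is a list of (score, tags) pairs where a higher score is a
    better performance. `penalties` maps a tag to a multiplier in (0, 1].
    Both the tags and the multipliers are hypothetical.
    """
    penalties = penalties or {}
    adjusted = []
    for score, tags in results:
        for tag in tags:
            score *= penalties.get(tag, 1.0)  # unknown tags are not penalized
        adjusted.append(score)
    best = sorted(adjusted, reverse=True)[:top_n]
    return mean(best) if best else 0.0


# Placeholder scores, not real tournament data.
results = [
    (100, set()),
    (80, {"secondary"}),  # character was only used as a secondary
    (60, set()),
]
peak = average_peak(results, top_n=2, penalties={"secondary": 0.5})
```

Here the penalized result falls from 80 to 40 and drops out of the top two, so the average is taken over 100 and 60.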

Playerbase character breakdowns can also give you an idea of how likely breakouts even are. I asked earlier in the thread what theories are on the most/least used characters because I'm building another PR breakdown. Essentially, across roughly 200 power rankings that represent regional level play, you can start to get an idea of what's common & not.
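A usage breakdown like this can be sketched in a few lines of Python. The data layout (region name mapped to a list of player/character pairs) and all names below are placeholders, not the actual power ranking dataset.

```python
from collections import Counter


def character_usage(power_rankings):
    """Count how many ranked players use each character.

    `power_rankings` maps a region name to a list of (player, character)
    pairs. This layout is an assumption for illustration.
    """
    usage = Counter()
    for ranked_players in power_rankings.values():
        for _player, character in ranked_players:
            usage[character] += 1
    return usage


# Placeholder regions and players, not real rankings.
power_rankings = {
    "Region A": [("Player 1", "Wolf"), ("Player 2", "Palutena")],
    "Region B": [("Player 3", "Wolf"), ("Player 4", "Lucas")],
}
usage = character_usage(power_rankings)
most_used = usage.most_common(1)
```

Characters that never appear simply have a count of zero, so the least-used end of the cast falls out of the same tally.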

This can also be used for regional breakdowns. Utility can go beyond simply gathering who is & isn't good character-wise; it can be used in analysis to see what players in certain regions even have to deal with. Such a breakdown will be lengthy, as this is a roughly representative world map of what I designate as "super regions", larger areas encompassing general regions (think SoCal, Tristate, Kanto, CDMX, Ontario, France, Chile, Saudi Arabia, and so forth; most of these places have their own sub-regions as well).

Current tier discussions can also be biased by what goes on in America. The USA is the central hub for the game, but this type of centered discussion leads to a number of problems for identifying potential character growth. It goes way beyond that, even: the hostile response to the PGRv2 among some parties, including some former or current top players, strongly suggests that character growth is likely, if only because some parties arrogantly underestimate players in Japan, Europe, Mexico, etc.

This means the metrics used will be heavily accompanied by non-USA data; areas like Mexico, Japan, and Europe see the most substantial boosts, with Australia, Southeast Asia, South America, and the Middle East seeing modest boosts.

I find it surprising that some gathering of information like this - to the extent I'm committing to - hasn't been done yet. It's common to see excellent contributions such as match-up chart or tier list collections, but combining a variety of factors seen in the meta, both material and opinionated, seems like the best route to creating either a decent tier list representative of Ultimate's first year and its potential meta, or if not a tier list, a more focused discussion on the qualities that characters excel at.

It also serves as a de facto tracker for characters that might be too overwhelming in the metagame. Such an issue does not seem likely in Ultimate (scares existed around Olimar, Snake, and Joker, but I never took these very seriously because these concerns were built largely on cherrypicking and extreme recency bias), but an existing model like the one I'm building would be far better at detecting issues like a potential Bayonetta 2.0.

Lastly, this project will coincide with a Smash 4 equivalent project of its first two years to equate balance between the titles.

I hope this project works out. It'll give us some valuable information regardless! Some of the information that's being gathered & combined:

-Existing "hidden boss" players

-Averaged together tier lists from professionals and possibly communities

-Aggregate match-up charts

-The top 10 peaks of each character

-The average placements of the top 5 mains of each character

-Who the ranked playerbase uses, both at the national level (represented by a rough cut of OrionRank's top 250 and supplemental data) and the regional level (represented by power rankings)

-Regional & national scores for the cast on the OR TTS

-Upset rates at larger events
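As one example of how an item on this list could be computed, here is a minimal Python sketch for averaging tier lists. Since many lists are unordered within tiers, it averages tier placements rather than exact ranks. The numeric values assigned to each tier and the character names are assumptions for illustration, not the project's actual scoring.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical numeric values for the tier categories named earlier.
TIER_VALUES = {"Top": 5, "High": 4, "Mid": 3, "Low": 2, "Bottom": 1}


def aggregate_tier_lists(tier_lists):
    """Average each character's tier placement across several lists.

    `tier_lists` is a list of dicts mapping character -> tier name.
    A character missing from a list is simply skipped for that list.
    """
    placements = defaultdict(list)
    for tier_list in tier_lists:
        for character, tier in tier_list.items():
            placements[character].append(TIER_VALUES[tier])
    return {character: mean(values) for character, values in placements.items()}


# Placeholder lists, not real players' opinions.
tier_lists = [
    {"Character A": "Top", "Character B": "Mid"},
    {"Character A": "High", "Character B": "Mid"},
]
averages = aggregate_tier_lists(tier_lists)
```

A character that splits between Top and High lands at 4.5, which keeps the "shades of viability" idea without forcing a strict order.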

disadvantage is not as horrible as claimed. He has stalling ability with his recovery, offstage and on, to help him grab ledge or land safely, as well as mixups using his projectiles. D-tilt is also a very strong "get off me" option on the ground. Of course he has a combo-food big body without the benefit of being a superheavy, plus he can really struggle at the ledge if he does not have Gyro. But R.O.B has actual options at least, which is more than most big-body characters can claim. Still surprised Goblin put him in "Decent" tier though

and

with Arsene. However, there are many characters with underrated advantage states in my opinion, with some examples held back because they are notably flawed in other aspects of gameplay

I do not consider having top-tier advantage states. While we know they hit uber-hard and can potentially take stocks at around 60-70 with a good read, they are way too slow in mobility and/or their best offensive options to press their advantage once they start it.

also kinda falls in this trap as well